Hosting a simple Code Editor on S3

I have an old code editor project sitting on GitHub without much description (repo link). So I thought, why not host it on S3 so I could showcase it from the repo?

It’s also good practice for brushing up on some of the AWS services (S3, CloudFront, Route 53). After almost an hour I had the site up, which isn’t too bad. Below are the steps I took.

Create an S3 bucket named ceditor.tdinvoke.net and upload my code to it.

Enable “Static website hosting” on the bucket

Create a web CloudFront distribution with the following settings (everything else left at the defaults):

- Origin Domain Name: the “Static website hosting” endpoint URL of the ceditor.tdinvoke.net bucket

- Alternate Domain Names (CNAMEs): codeplayer.tdinvoke.net

- Viewer Protocol Policy: Redirect HTTP to HTTPS

- SSL Certificate: Custom SSL Certificate - reference my existing SSL certificate

Create a new alias A record in Route 53 and point it to the new CloudFront distribution.
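The bucket steps can also be scripted. Below is a minimal boto3 sketch that assumes boto3 is installed, credentials are already configured, and the site files sit in a local folder (`./ceditor` is a hypothetical path). It only enables static website hosting and uploads the files with a guessed Content-Type. It leaves out three things: the bucket policy that makes objects readable through the website endpoint, and the CloudFront and Route 53 steps.

```python
"""Sketch: enable S3 static website hosting and upload a local folder.

Assumes boto3 and configured AWS credentials. The bucket name is from the
post; the local folder "./ceditor" is hypothetical.
"""
import mimetypes
import os


def enable_website(s3, bucket, index_document="index.html"):
    # Equivalent of turning on "Static website hosting" in the console.
    s3.put_bucket_website(
        Bucket=bucket,
        WebsiteConfiguration={"IndexDocument": {"Suffix": index_document}},
    )


def upload_site(s3, bucket, root):
    """Upload every file under root, using relative paths as object keys."""
    uploaded = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            key = os.path.relpath(path, root).replace(os.sep, "/")
            # Set Content-Type explicitly so browsers render HTML/JS/CSS
            # instead of treating them as generic binary downloads.
            content_type = mimetypes.guess_type(name)[0] or "application/octet-stream"
            s3.upload_file(path, bucket, key, ExtraArgs={"ContentType": content_type})
            uploaded.append(key)
    return uploaded


if __name__ == "__main__":
    import boto3

    client = boto3.client("s3")
    enable_website(client, "ceditor.tdinvoke.net")
    print(upload_site(client, "ceditor.tdinvoke.net", "./ceditor"))
```

Both helpers take the client as a parameter, so they can be exercised with a stub before touching a real bucket.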

Aaand here is the site: https://codeplayer.tdinvoke.net/

Next I need to go back to the repo and write up a readme.md for it.

Get AWS IAM credentials report script

A quick PowerShell script to generate the AWS IAM credential report and save it as a CSV file to a local location.

```powershell
Import-Module AWSPowerShell
```
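For comparison, here is a rough Python equivalent using boto3. It is a sketch and not the original PowerShell script. boto3’s IAM client provides `generate_credential_report` and `get_credential_report`. The sketch polls until the report state is `COMPLETE`, then writes the CSV bytes to disk. The output path `credential_report.csv` and the polling values are assumptions.

```python
"""Sketch: generate the IAM credential report and save it as CSV.

Assumes boto3 and configured AWS credentials; file name and polling
values are arbitrary choices.
"""
import time


def fetch_credential_report(iam, poll_seconds=2.0, max_attempts=15):
    """Ask IAM to generate the report, polling until it is COMPLETE."""
    for _ in range(max_attempts):
        state = iam.generate_credential_report()["State"]  # STARTED / INPROGRESS / COMPLETE
        if state == "COMPLETE":
            # "Content" holds the report itself as CSV bytes.
            return iam.get_credential_report()["Content"]
        time.sleep(poll_seconds)
    raise TimeoutError("IAM credential report was not ready in time")


def save_report(content, path="credential_report.csv"):
    with open(path, "wb") as fh:
        fh.write(content)
    return path


if __name__ == "__main__":
    import boto3

    print(save_report(fetch_credential_report(boto3.client("iam"))))
```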

How to verify Google Search with Route53

I only recently got this site onto Google Search. I had totally forgotten about it when I created the site.

The process is quite easy: following the instructions at this link should cover the task.

It might take anywhere from 10 minutes to 5 hours for the TXT record to propagate, so be patient!
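If you would rather add the TXT record from code than from the console, the sketch below builds a Route 53 change batch with boto3. The hosted zone ID, domain and token are placeholders; the real token comes from Search Console. Route 53 expects TXT values wrapped in double quotes.

```python
"""Sketch: add a Google site-verification TXT record with Route 53.

Zone ID, domain and token are placeholders, not real values.
"""


def verification_change_batch(domain, token, ttl=300):
    """Build a ChangeBatch that UPSERTs the verification TXT record."""
    return {
        "Comment": "Google Search Console site verification",
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "TXT",
                    "TTL": ttl,
                    # TXT record values must be quoted in Route 53.
                    "ResourceRecords": [{"Value": f'"google-site-verification={token}"'}],
                },
            }
        ],
    }


def add_verification_record(route53, hosted_zone_id, domain, token):
    return route53.change_resource_record_sets(
        HostedZoneId=hosted_zone_id,
        ChangeBatch=verification_change_batch(domain, token),
    )


if __name__ == "__main__":
    import boto3

    add_verification_record(boto3.client("route53"), "ZXXXXXXXXXXXXX", "tdinvoke.net", "TOKEN")
```

Be careful with existing records: `UPSERT` replaces the whole TXT record set at that name. If the name already has TXT values (an SPF record, for example), add them to `ResourceRecords` as well, or they will be overwritten.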

How to find SCOM DB server

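Use this if the Microsoft SQL Server management pack (MP) is not available in your SCOM, or the SQL Server hosting the SCOM database has not been discovered.

On a SCOM management server, open regedit and navigate to the location below (DatabaseServerName is a value under the Setup key):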

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft Operations Manager\3.0\Setup\DatabaseServerName
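The same lookup can be scripted with Python’s built-in `winreg` module, which only exists on Windows. This is a sketch: it assumes `DatabaseServerName` is a value under the `Setup` key, as in the path above. It requests the 64-bit registry view so that a 32-bit Python build still sees the key.

```python
"""Sketch: read the SCOM database server name from the registry (Windows only)."""
import sys

# Key and value taken from the registry path above.
SETUP_KEY = r"SOFTWARE\Microsoft\Microsoft Operations Manager\3.0\Setup"
VALUE_NAME = "DatabaseServerName"


def get_scom_db_server():
    import winreg  # standard library, but only available on Windows

    access = winreg.KEY_READ | winreg.KEY_WOW64_64KEY  # force the 64-bit view
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, SETUP_KEY, 0, access) as key:
        value, _value_type = winreg.QueryValueEx(key, VALUE_NAME)
    return value


if __name__ == "__main__" and sys.platform == "win32":
    print(get_scom_db_server())
```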

winhttp proxy command

Command to set the WinHTTP proxy on Windows Server:

```
netsh winhttp set proxy proxy-server="http=<proxy>:<port>;https=<proxy>:<port>" bypass-list="<local>;<url>"
```

PowerShell snippet to grab the WinHTTP proxy value:

```powershell
$ProxyConfig = netsh winhttp show proxy
```

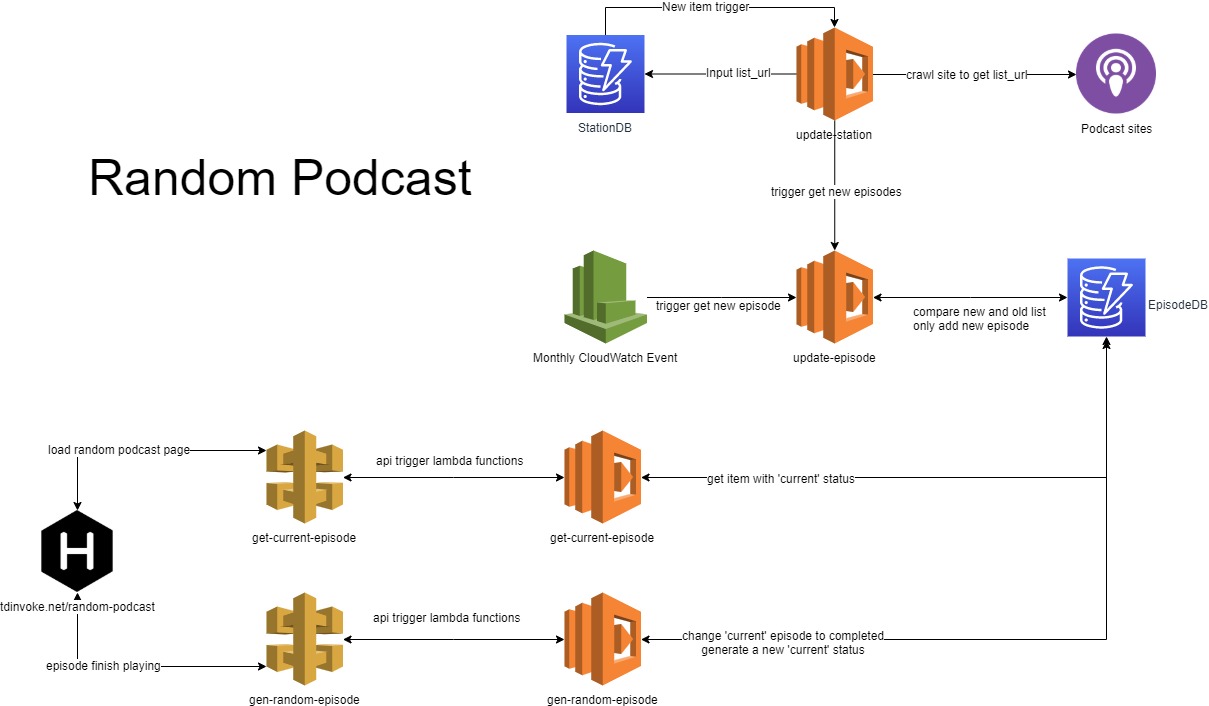
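The same value can be pulled out with Python. The sketch below runs `netsh winhttp show proxy` and parses the `Proxy Server(s)` and `Bypass List` lines. The output layout it expects is an assumption based on typical English-locale output, so treat the parser as a starting point rather than a robust implementation.

```python
"""Sketch: read and parse the WinHTTP proxy settings via netsh."""
import subprocess
import sys


def parse_winhttp_proxy(output):
    """Turn "Key : value" lines into a dict; lines without a value are skipped."""
    settings = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            settings[key.strip()] = value.strip()
    return settings


def split_proxy_servers(proxy_server):
    """Split "http=host:port;https=host:port" into {scheme: address}.

    A bare "host:port" (same proxy for every scheme) is stored under "*".
    """
    servers = {}
    for entry in proxy_server.split(";"):
        scheme, sep, address = entry.partition("=")
        if sep:
            servers[scheme.strip()] = address.strip()
        elif entry.strip():
            servers["*"] = entry.strip()
    return servers


if __name__ == "__main__" and sys.platform == "win32":
    result = subprocess.run(
        ["netsh", "winhttp", "show", "proxy"], capture_output=True, text=True, check=True
    )
    settings = parse_winhttp_proxy(result.stdout)
    print(settings)  # empty dict when WinHTTP uses direct access
    if "Proxy Server(s)" in settings:
        print(split_proxy_servers(settings["Proxy Server(s)"]))
```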

My random podcast app

I’ve been trying to catch up with a few podcasts and can’t decide what to listen to first. So I thought, let’s create an app that picks a random episode for me. Less time picking and more time listening!

So here is what I came up with.

I thought it would be straightforward, but it took me the whole weekend to get it up T__T

There are 4 lambda functions in this app.

1- update-station: triggered whenever a new item is added to stationsDB. It crawls the station’s main page to get the episode playlist and inserts it back into stationsDB as list_url.

2- update-episode: triggered by the update-station function or by a monthly CloudWatch event. This function loops through stationsDB and runs each item’s spider function on its list_url. The output is a list of the 50 most recent episodes for each station. That list is compared with all episodes already in episodesDB, and the differences are added to episodesDB.

3- gen-random-episode: triggered by API Gateway when an episode finishes playing at https://blog.tdinvoke.net/random-podcast/. This function first sets the current episode’s status to ‘completed’. It then pulls every episode URL from episodesDB that hasn’t been played yet (blank status), randomly picks one, and sets its status to ‘current’.

4- get-current-episode: triggered by API Gateway when the page https://blog.tdinvoke.net/random-podcast/ is loaded. This one is simple: it pulls the episode with ‘current’ status.
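The status bookkeeping described in functions 2 to 4 can be sketched without AWS. In the sketch, plain dicts stand in for DynamoDB items, and the `url` and `status` field names are hypothetical. In the real functions, the list filtering would be DynamoDB scans or queries, and the status changes would be item updates.

```python
"""Sketch of the episode bookkeeping behind update-episode, gen-random-episode
and get-current-episode. Dicts stand in for DynamoDB items; the "url" and
"status" field names are hypothetical.
"""
import random
from typing import Iterable, List, Optional


def new_episodes(crawled_urls: Iterable[str], known_urls: Iterable[str]) -> List[str]:
    """update-episode: keep only crawled URLs that are not in episodesDB yet."""
    known = set(known_urls)
    return [url for url in crawled_urls if url not in known]


def gen_random_episode(episodes: List[dict], rng: Optional[random.Random] = None) -> Optional[dict]:
    """gen-random-episode: complete the current episode, then pick an unplayed one."""
    rng = rng or random.Random()
    for episode in episodes:
        if episode.get("status") == "current":
            episode["status"] = "completed"
    # Unplayed episodes have a blank (or missing) status.
    unplayed = [episode for episode in episodes if not episode.get("status")]
    if not unplayed:
        return None
    pick = rng.choice(unplayed)
    pick["status"] = "current"
    return pick


def get_current_episode(episodes: List[dict]) -> Optional[dict]:
    """get-current-episode: return the episode marked 'current', if any."""
    return next((e for e in episodes if e.get("status") == "current"), None)
```

Passing a seeded `random.Random` makes the pick reproducible, which helps when testing the Lambda logic locally.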

You can find the code here

To see the app in action, please visit here

Issues encountered/thoughts:

- Add a UI page to modify the station DB. I’ll have to work out how to add authorisation to the API call that adds a new station.

- Split the crawler functions into separate Lambda functions to keep them clean and easy to manage.

- Add more crawlers. At the moment, this app only crawls playerfm stations.

- Learnt how to add JS scripts to Hexo. There isn’t much information on how to do it out there, so I had to hack around for a while. Basically, I created a new script folder at themes/‘my-theme’/source/‘td-podcast’, chucked all my JS scripts in there, then modified ‘_partials/scripts.ejs’ to reference that folder. Learnt a bit of EJS as well.

- Chalice didn’t have a DynamoDB stream trigger, so I gave up halfway and went back to creating the Lambda functions manually.

- Looking into SAM and CloudFormation to set up CI/CD for this.

- This could be turned into a random YouTube/Twitch video picker. Looking into the YouTube (Google) API and the Twitch API.

AWS WAF automations

A friend of mine suggested that I write something about AWS WAF security automations. The solution is mentioned in the Use AWS WAF to Mitigate OWASP’s Top 10 Web Application Vulnerabilities whitepaper, and there is plenty of material about it on the net. So I thought, instead of writing about what it is or how to set it up, let’s have some fun DDoSing my own site and actually see how it works.

I’m going to try to break my site with 3 different methods.

1. http flood attack

My weapon of choice is PyFlooder.

After about 5,000 requests, the Lambda function kicked in and blocked my access to the site. I could also see that my IP had been blocked by the WAF HTTP flood rule.

I then removed the IP from the block list and moved on to the next attack.

2. XSS

Next up is XSS: putting a simple <script> tag into the URI got me a 403 error straight away.

3. Badbot

For this method I used Scrapy. I wrote a short spider script to crawl my site, targeting the honeypot URL.

```python
import scrapy
```

Release the spider!!!!

and got the 403 error as expected.
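The bad-bot rule depends on a honeypot URL that robots.txt marks as disallowed. Well-behaved crawlers skip that URL, so anything that requests it is treated as a bad bot and has its IP added to the block list. Python’s built-in `urllib.robotparser` offers a quick way to check a robots.txt before publishing it (for example, the file dropped into Hexo’s /source folder). In the sketch below, `/trap/` is a hypothetical honeypot path, not the one the WAF stack generates.

```python
"""Sketch: confirm robots.txt disallows the honeypot path for every crawler.

"/trap/" is a hypothetical honeypot path used for illustration.
"""
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /trap/
"""


def allowed(url, agent="*", robots=ROBOTS_TXT):
    """Return True if a crawler that obeys robots.txt may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in ("https://blog.tdinvoke.net/", "https://blog.tdinvoke.net/trap/"):
        print(url, allowed(url))
```

A spider that deliberately ignores these rules is exactly what the honeypot is meant to catch.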

Issues encountered/thoughts:

Setting up the bot wasn’t as easy as I expected, but I learnt a lot about Scrapy.

I accidentally deleted the badbot IP list from the badbot rule without realising it. I only found the silly mistake by going through the whole pipeline (API Gateway -> Lambda -> WAF IP list -> WAF rule) to troubleshoot the issue.

PyFlooder is not compatible with Windows, so I had to spin up an Ubuntu VM to run it.

Learnt how to add files to the Hexo source. Not complicated at all: just chuck the file into the /source folder. Do not use the hexo-generator-robotstxt plugin; I almost broke my site because of it.

Overall this was an interesting exercise - breaking is always more fun than building!

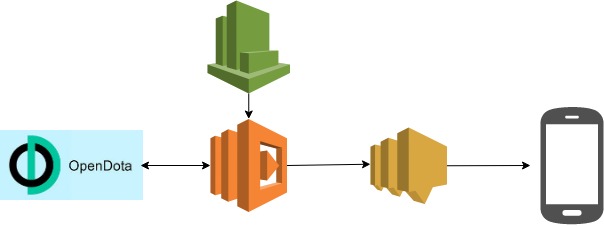

TI9 - Follow my fav team with opendota api, lambda and sns

It’s Dota season of the year: The International 9, the biggest esports event on the planet. So I thought I should make a project related to the event: a function that notifies me about my favorite team’s matches.

This function uses the OpenDota API, AWS Lambda, CloudWatch and SNS. Below is a high-level design of the function I put together:

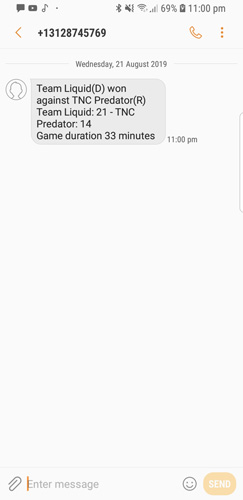

The Lambda function is triggered by CloudWatch every hour. If it finds that my favorite team has just finished a match, it sends me the result by SMS. Below is the Lambda Python code and a screenshot of an SMS message.

```python
import os
```
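Here is a rough sketch of how such an hourly handler could work. It is not the original code. It assumes OpenDota’s `GET /api/teams/{team_id}/matches` endpoint returns match dicts with `match_id`, `start_time`, `duration`, `radiant`, `radiant_win` and `opposing_team_name` fields. The environment variable names `TEAM_ID`, `TEAM_NAME` and `TOPIC_ARN` are hypothetical, and the SMS is sent through an SNS topic that has an SMS subscription.

```python
"""Sketch: hourly Lambda that texts the result of a just-finished team match.

OpenDota field names and the environment variable names are assumptions.
"""
import json
import os
import time
import urllib.request

OPENDOTA_URL = "https://api.opendota.com/api/teams/{team_id}/matches"
CHECK_WINDOW = 3600  # seconds, matching the hourly CloudWatch schedule


def fetch_team_matches(team_id):
    with urllib.request.urlopen(OPENDOTA_URL.format(team_id=team_id), timeout=10) as resp:
        return json.load(resp)


def finished_recently(match, now, window=CHECK_WINDOW):
    """True if the match ended within the last `window` seconds."""
    end_time = match["start_time"] + match["duration"]
    return now - window <= end_time <= now


def format_result(team_name, match):
    # The team won if its side ("radiant" True/False) matches radiant_win.
    won = match["radiant"] == match["radiant_win"]
    opponent = match.get("opposing_team_name") or "unknown opponent"
    return f"{team_name} {'won' if won else 'lost'} against {opponent} (match {match['match_id']})"


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    team_name = os.environ.get("TEAM_NAME", "My team")
    now = int(time.time())
    recent = [m for m in fetch_team_matches(os.environ["TEAM_ID"]) if finished_recently(m, now)]
    sns = boto3.client("sns")
    for match in recent:
        sns.publish(TopicArn=os.environ["TOPIC_ARN"], Message=format_result(team_name, match))
    return {"notified": len(recent)}
```

Matching the check window to the schedule interval means each finished match should be reported once. A run that is delayed or retried could still skip or repeat a match.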

Things could be improved:

- set up full CI/CD

- Schedule the CloudWatch trigger to run only while matches are happening, not 24/7

- A UI to select a favorite team, or hook it up to a Steam account’s favorite team

I’ll come back another day to work on this. Got to go watch the game now…

Let’s go Liquid!

Create my own VPN

I just created my personal VPN using the OpenVPN AMI from AWS Marketplace so I could trick Netflix into showing movies from the US Netflix region (link on how to create the VPN). Below are the differences between AU and US when I search for “Marvel”.

Netflix au with a search on “Marvel”

Netflix us with a search on “Marvel”

Close, but no cigar. I got the error below when I tried to watch Infinity War. Damn Netflix! :(

netflix.com/proxy

Setting up the VPN was quite straightforward, though I was a bit confused by the password. Eventually I figured out that I needed to reset the password with:

sudo passwd openvpn

Use the same username declared when initialising OpenVPN, not the instance login user (openvpnas).

To clean up, just terminate the EC2 instance, and don’t forget to disassociate and release the Elastic IP address.
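The cleanup can also be done with boto3. This sketch assumes you know the instance ID and the Elastic IP (both placeholders here). It disassociates and releases the address first, then terminates the instance. Leaving an unattached Elastic IP allocated is what keeps costing money.

```python
"""Sketch: release the VPN's Elastic IP and terminate its EC2 instance.

Instance ID and public IP are placeholders.
"""


def cleanup_vpn(ec2, instance_id, public_ip):
    address = ec2.describe_addresses(PublicIps=[public_ip])["Addresses"][0]
    # Only associated addresses carry an AssociationId.
    if "AssociationId" in address:
        ec2.disassociate_address(AssociationId=address["AssociationId"])
    ec2.release_address(AllocationId=address["AllocationId"])
    ec2.terminate_instances(InstanceIds=[instance_id])


if __name__ == "__main__":
    import boto3

    cleanup_vpn(boto3.client("ec2"), "i-0123456789abcdef0", "203.0.113.10")
```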

Serverless Blog

So this blog is serverless, using a combination of Hexo, S3, GitHub, CodeBuild, Route 53 and CloudFront. My original plan was to build the blog from the ground up with Lambda (Chalice), DynamoDB and some JavaScript hacking. But I figured someone somewhere must have had the same idea. One Google search later, I found two wonderful guides from HackerNoon and greengocloud. Thanks to those guides I was able to spin this up within 4-5 hours. I’m still getting used to Hexo and Markdown, but it feels pretty good to have it working.

I struggled a bit with Git: the theme didn’t get committed properly. Removing the Git submodule sorted the issue out.

CodeBuild also didn’t play nicely with the default role, so I had to give the role full S3 access to the bucket. It’s working like a charm now.
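A narrower option than full S3 access is an inline policy scoped to the blog bucket. This sketch assumes the build deploys with something like `aws s3 sync public/ s3://<bucket> --delete`, which needs list, read, write and delete permissions. The role, bucket and policy names are hypothetical.

```python
"""Sketch: attach a bucket-scoped inline policy to the CodeBuild role.

Role, bucket and policy names are hypothetical.
"""
import json


def deploy_policy(bucket):
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            # Listing applies to the bucket itself.
            {"Effect": "Allow", "Action": ["s3:ListBucket"], "Resource": bucket_arn},
            # Object-level actions apply to keys inside the bucket.
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


def attach_policy(iam, role_name, bucket, policy_name="hexo-deploy-s3"):
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(deploy_policy(bucket)),
    )


if __name__ == "__main__":
    import boto3

    attach_policy(boto3.client("iam"), "codebuild-blog-service-role", "blog.tdinvoke.net")
```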

PS: This blog uses the Chan theme by denjones.